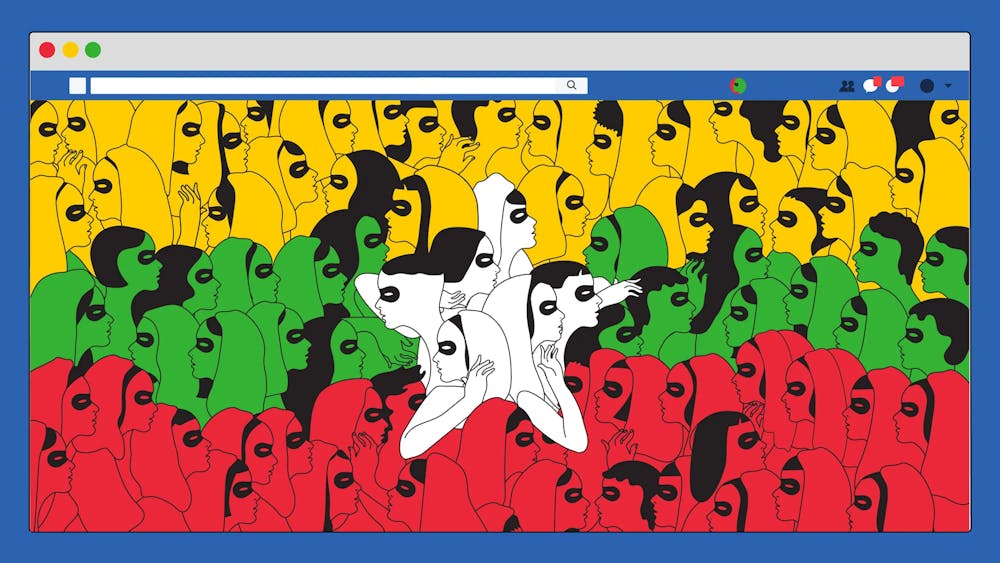

Myanmar, once known as "the land of temples and pagodas," has been grappling with a dark reality. While the country boasts beautiful landscapes, it has also been stained by the persecution of the Rohingya Muslims, a long-marginalized ethnic minority.

This crisis culminated in an episode of ethnic cleansing that displaced over 700,000 Rohingyas. Freedom of the press has long been scarce in Myanmar, and the arrival of Facebook in 2010 marked a significant turning point, though not for the better.

This social media giant, intended to connect people, instead became a weapon used to incite violence against the Rohingya.

The Rohingya people have faced decades of discrimination and exclusion in Myanmar. Denied citizenship, they are considered stateless and vulnerable. Tensions escalated in the 1970s under military rule, leading to forced displacements and violence against the Rohingya.

This tragic pattern continued, with major outbreaks occurring in 2012 and 2017. The 2017 violence, described by the UN as "ethnic cleansing," forced hundreds of thousands of Rohingya to flee to neighboring Bangladesh, creating one of the world's largest refugee crises.

Before 2013, Myanmar was one of the most isolated countries in the world due to military control. However, with a shift towards a quasi-civilian government, internet access became more widely available. The price of SIM cards plummeted, leading to a surge in mobile phone ownership.

Facebook, with its messaging features, newsfeeds, and entertainment options, saw explosive growth. By 2018, it boasted over 18 million users in Myanmar, with a report suggesting 78% relied on Facebook for their news. Thant Sin, a digital rights activist, aptly described Facebook as "the internet" for many in Myanmar.

Weaponizing the Platform

Unfortunately, Facebook's potential for connection turned into a breeding ground for hate speech. Military officials and nationalist groups exploited the platform to spread misinformation and demonize the Rohingya.

Hateful messages portrayed Rohingyas as dangerous outsiders, undeserving of rights. Content moderation was severely lacking. Zawgyi, a Burmese font encoding that is widely used in Myanmar but is not compatible with the Unicode standard, posed a significant challenge for Facebook's systems.

Inaccurate translations meant hate speech often went unchecked. For instance, a Burmese post calling for the killing of Rohingyas was rendered for content moderators as "I shouldn't have a rainbow in Myanmar."

This lack of control allowed calls for violence to flourish. Examples included dehumanizing terms like "dog kalars" and "Bengali wh*res" used alongside accusations of terrorism against Rohingya Muslims.

The military even used Facebook to coordinate attacks in which Rohingya villages were burned down. Rumors spread like wildfire on the platform, inciting violence between Muslim and Buddhist communities.

An Amnesty International report revealed that a large portion of this hateful content had been present for years. Facebook wasn't just a passive platform; its algorithms actively amplified hate speech, creating a digital echo chamber of violence.

While Facebook played a central role, it wasn't the only platform involved. Twitter, though less popular, saw a surge in activity after the 2017 attacks. Wirathu, an extremist nationalist monk, found refuge on Twitter after being banned from Facebook.

Similarly, VK, a Russian social media platform, emerged as a haven for those banned from Facebook, including Myanmar's military leader.

David Madden, a tech entrepreneur, warned Facebook officials in 2015 about the platform's misuse. Unfortunately, his warnings fell on deaf ears.

As the crisis unfolded, pressure mounted on Facebook. In 2018, Senator Leahy questioned Mark Zuckerberg about Facebook's role in inciting violence. Meta, Facebook's parent company, eventually admitted its failings and promised improvements, including hiring more Burmese content reviewers.

However, legal action followed. Human rights groups in the US and UK, together with Rohingya individuals, filed lawsuits against Meta, seeking compensation exceeding $150 billion. Sawyeddollah, a Rohingya activist, emphasized the need for not just reparations but also education to rebuild shattered lives. As of 2024, no reparations have been made.

The Rohingya crisis remains unresolved. The dream of a peaceful life in their homeland seems distant.

Facebook, intended to connect the world, became a tool for division and violence. This tragedy serves as a stark reminder of the immense responsibility held by social media platforms.